Obeying the user to improve intranet search

Thoughts and conclusions from observing Google and website search across similar tasks

Over the last three months I have been testing the website of a very large multinational organisation. The test procedure was simple: starting on the organisation’s home page, people were given a task such as “Find the report on Acme services for 2016”, and I then observed them as they tried to complete ten such tasks. The testing used the Customer Carewords Task Performance Indicator methodology.

For a variety of reasons, search was the dominant tactic for solving the tasks. In fact, over 80% of people started each task by searching. As a result, I ended up watching 835 individual searches during the testing.

Each test started on the organisation’s website home page, and 70% of the searches I watched used website search, that is, the search engine the organisation provides for searching its own site. However, the introduction to the testing told participants that they could use external search engines to solve their tasks if they wanted to. Many people did, and about 30% of the searches used Google (two participants used Bing; no other external engines were used). This made it possible to compare search behaviour between website search and Google.

People who used Google were much more likely to solve the tasks. The 30% of searches made with Google accounted for 45% of task successes, meaning you were about twice as likely to get the right answer if you relied on Google.
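As a rough check on “about twice as likely”, assuming that website search accounted for the remaining 70% of searches and the remaining 55% of successes, compare each engine’s share of successes with its share of searches:

$$\frac{45\% / 30\%}{55\% / 70\%} = \frac{1.5}{0.786} \approx 1.9$$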

So far, so obvious. With R&D spending of $8 billion in 2013, Google is pretty serious about delivering better search results. I won’t discuss the reasons why Google search is better (there are plenty of articles on that). Instead, I’m more concerned with the user experience of search, and with drawing some conclusions about how intranet search teams can improve it.

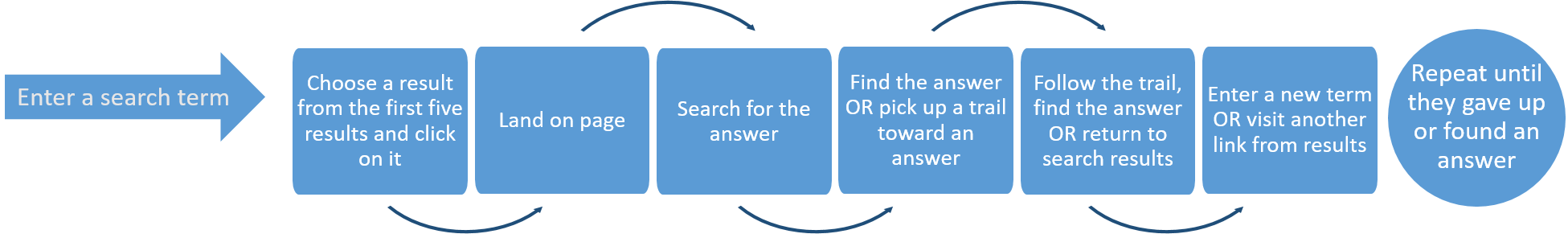

The first thing I observed was that user journeys with Google and with website search followed the same basic pattern (with different outcomes, of course).

Observing this pattern again and again, I drew one major, obvious conclusion and two connected conclusions, and made one conceptual leap.

Major conclusion: Google has moulded search behaviour. People act as though website search is the same as Google.

I observed no measurable differences between the way people used Google and the way they used website search. Of course, I could not tell what participants were thinking as they searched, and there was no opportunity to follow up with questions about the decisions they were making. But what I observed was a homogeneous user journey (above) and very little use of the advanced search features offered by website search. It seems reasonable to push this thinking a little further and draw two connected conclusions.

Two connected conclusions

1 People expect relevance

In the tests, people expected relevant results to appear at the very top of the search results. People searched a lot: they persisted with search, changing terms and adding words to try to get results that would help them solve the task. On average, people ran more than twenty searches in each test session, over the course of an hour. With very few exceptions, they did not go beyond the first page of results.

Google, of course, uses PageRank, which counts the number and quality of links to a page to make a rough estimate of how important that page is, and combines it with many other signals to rank results for a search term. Because it draws on links from across the whole web, PageRank helps push relevant results to the top. Website search (and enterprise search) is bounded by the organisation and has no tool of comparable effectiveness for increasing relevancy. In the testing, people needed to tune their own results using ‘advanced search’ or filters to increase relevancy. Yet people fully expected website search to deliver the same level of relevancy as Google, and the filters, which would have helped, were used only a handful of times.
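PageRank’s published core idea can be sketched in a few lines: a page’s score is built from the scores of the pages that link to it, found by repeating the calculation until it settles. This is a simplified illustration only, assuming the damping factor of 0.85 used in the original PageRank paper and a tiny hypothetical link graph; Google’s production ranking is not public and blends PageRank with many other signals. Note that the score is the same whatever the query, which is why it measures a page’s importance rather than its relevance to a particular term.

```python
def pagerank(links, damping=0.85, iterations=100):
    """Simplified PageRank by power iteration.

    links maps every page to the list of pages it links to; every link
    target must also appear as a key.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # start from a uniform score
    for _ in range(iterations):
        # Every page gets a small baseline share (the "random jump").
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outlinks in links.items():
            if outlinks:
                # A page passes its score, split evenly, to the pages it links to.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank


# Hypothetical four-page site: "report" is linked from three other pages.
example_links = {
    "home": ["report", "news"],
    "news": ["report"],
    "report": ["home"],
    "archive": ["report"],
}
ranks = pagerank(example_links)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page:8} {score:.3f}")
```

Running it ranks “report” first, because three of the four pages link to it.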

2 People expect precision from the first search

People commented a lot on the search results during testing:

“Using the (website) search is impossible because the results often do not match what you want.”

“I used a lot of words in the search box – but only found an answer infrequently – could be better.”

Website search returned every document and page containing words from the search query. The results were comprehensive but lacked any meaningful ordering by relevance (in the search literature this is described as being good at recall but poor at precision, and it is a ubiquitous problem in enterprise search). Google, on the other hand, is dedicated to increasing search precision, using at least 26 separate algorithms to provide accurate results. People expected website search to behave like Google.
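The recall/precision trade-off can be made concrete with a small sketch. Assuming a hypothetical set of 200 pages that all contain the query words, of which only three are actually relevant, returning every match gives perfect recall but very low precision, and a first page with nothing useful on it. The function names and data here are illustrative, not taken from any particular search product.

```python
def precision_recall(retrieved, relevant):
    """Precision: share of retrieved items that are relevant.
    Recall: share of relevant items that were retrieved."""
    retrieved_set = set(retrieved)
    hits = len(retrieved_set & relevant)
    precision = hits / len(retrieved_set) if retrieved_set else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


def precision_at_k(ranked, relevant, k=10):
    """Precision over the first k results, roughly what a user sees on page one."""
    top = ranked[:k]
    if not top:
        return 0.0
    return sum(1 for doc in top if doc in relevant) / len(top)


# Hypothetical data: 200 pages match the query words, but only 3 are relevant.
all_matches = [f"doc{i}" for i in range(200)]
relevant = {"doc150", "doc180", "doc199"}

# Keyword-only ordering: every match returned, relevant pages buried at the end.
print(precision_recall(all_matches, relevant))  # (0.015, 1.0): perfect recall, poor precision
print(precision_at_k(all_matches, relevant))    # 0.0: nothing relevant on page one

# Same results, reordered so the relevant pages come first.
reranked = sorted(all_matches, key=lambda doc: doc not in relevant)
print(precision_at_k(reranked, relevant))       # 0.3: all three relevant pages on page one
```

Recall is identical in both orderings; only the ranking changes what the user actually sees, which is the gap people noticed between website search and Google.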

The conceptual leap

Employees using intranet search behave the same way as people using website search.

Improving intranet search user experience

Armed with this knowledge, what can be done to improve the user experience of intranet search? An arms race with Google to engineer better relevance and precision seems like a lost cause. Instead, teams should consider ways to improve the search experience that work with the fact that users behave as if their search engine were Google.

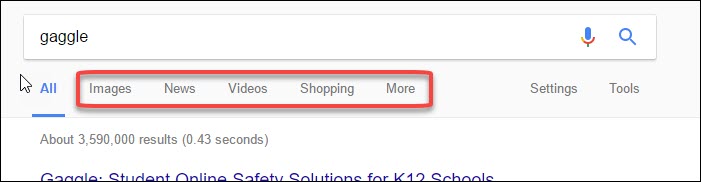

1 Present filters like Google

Presenting filters and advanced search features straight away at the side of the search results page might seem like obvious good practice, but in my study most people ignored the filters shown on the right-hand side of the website search results page. Better to obey users’ expectation that filters are presented as Google presents them: immediately below the search box, not in the left column or, even worse, the right column.

You can bet that Google’s design is the result of research into how people use filters, and it is reasonable to assume that this is the way users now expect filtering to work.

2 Present suggestions and best bets like Google

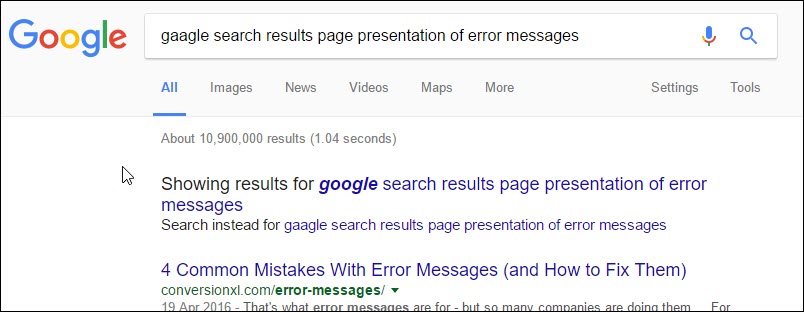

If you misspell something in Google, you are presented with a spelling suggestion.

Experiment with presenting explicit messages in the same way (exactly the same way) on the second and subsequent search attempts. The same messaging feature could also be used for ‘best bets’ and for suggestions based on readily available analysis of search logs.
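One way to drive such a message is sketched below, assuming a hypothetical vocabulary of known terms (for example, harvested from the index or from search logs) and a hypothetical hand-curated best-bets table. It uses Python’s standard `difflib.get_close_matches` for the spelling suggestion; a production search engine would more likely use its own spellchecker, and the 0.8 similarity cutoff is an illustrative choice.

```python
import difflib

# Hypothetical vocabulary, e.g. terms harvested from the index or from search logs.
VOCABULARY = ["annual", "report", "acme", "services", "expenses", "holiday", "policy"]

# Hypothetical best bets: query word -> hand-picked page, chosen from search-log analysis.
BEST_BETS = {
    "expenses": "/finance/expenses-policy",
    "holiday": "/hr/holiday-booking",
}


def suggestion_message(query, attempt):
    """Return a one-line message for the top of the results page, or None.

    attempt is the number of searches the user has made in this session;
    the message only appears from the second attempt onwards.
    """
    if attempt < 2:
        return None
    words = query.lower().split()
    # A best bet takes priority over a spelling suggestion.
    for word in words:
        if word in BEST_BETS:
            return f"Best bet: {BEST_BETS[word]}"
    # Otherwise suggest the closest known spelling for each word.
    corrected = []
    for word in words:
        matches = difflib.get_close_matches(word, VOCABULARY, n=1, cutoff=0.8)
        corrected.append(matches[0] if matches else word)
    if corrected != words:
        return "Did you mean: " + " ".join(corrected) + "?"
    return None


print(suggestion_message("anual reprot", 1))  # first attempt: no message
print(suggestion_message("anual reprot", 2))
print(suggestion_message("expenses claim", 2))
```

Keeping the message to a single line in a fixed position mirrors the Google pattern people already recognise, rather than introducing a new interface element.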

3 Start with fewer results

Although strongly counterintuitive to many teams, this technique can drastically improve relevance. Instead of starting with search results drawn from everything indexed on the site, remove some results from the initial results page.

This reverses the usual process of letting users turn on filters to restrict results (in my testing very few people used that feature). Instead, it starts by excluding whole sets of results, such as PDF, Excel or other document types. Crucially, you must allow users to add these back in if they want to (again, use Google’s message format on subsequent pages to do this).

Here we are copying Google (at least in intention, if not in method) and its Penguin algorithm, which is designed to exclude results that do not meet Google’s standards.
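Excluding document types by default, with a way back in, can be sketched as below. The `Result` type, the set of excluded types and the wording of the message are all hypothetical; in practice this would usually be a default filter on the search engine’s own query rather than post-processing in application code.

```python
from dataclasses import dataclass


@dataclass
class Result:
    title: str
    url: str
    doc_type: str  # e.g. "html", "pdf", "xlsx"


# Hypothetical default: document types left off the first results page.
EXCLUDED_BY_DEFAULT = {"pdf", "xlsx", "docx"}


def initial_results(results, include_documents=False):
    """Return (shown, hidden_count). Hidden types come back when the user asks."""
    if include_documents:
        return list(results), 0
    shown = [r for r in results if r.doc_type not in EXCLUDED_BY_DEFAULT]
    return shown, len(results) - len(shown)


def add_back_message(hidden_count):
    """Google-style one-line message offering to restore the excluded results."""
    if hidden_count == 0:
        return None
    return f"{hidden_count} PDF, Excel and Word documents were left out. Include them?"


# Hypothetical results for the query "acme services 2016".
example_results = [
    Result("Acme services 2016", "/acme-services-2016", "html"),
    Result("Acme services report 2016", "/acme-services-2016.pdf", "pdf"),
    Result("Acme services figures", "/acme-figures.xlsx", "xlsx"),
]
shown, hidden = initial_results(example_results)
for result in shown:
    print(result.title, result.url)
print(add_back_message(hidden))
```

Showing the count of hidden items in the message matters: it tells users that nothing has been lost, only set aside.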

4 Improve titles

Improving the actual content is fraught with difficulty, as it involves changing the practices of everyone who writes anything that is indexed by the search engine. Google, of course, has the market to do this for it: if you don’t follow the published guidelines on titles and meta tags, don’t expect to be found, so a little SEO (Search Engine Optimisation) knowledge is necessary to get anywhere on the web. Achieving this kind of wholesale, bottom-up change is very difficult, but by focussing purely on titles and the first lines of content you could get plenty of bang for your buck in improving both precision and relevance.
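Focussing on titles lends itself to a simple, repeatable audit. The sketch below assumes a hypothetical export of (URL, title) pairs from the search index and flags missing, generic, duplicate and overly long titles. The list of generic titles and the 60-character limit are illustrative assumptions, not published rules; the same approach could be extended to check the first lines of content.

```python
from collections import Counter

# Illustrative assumptions, not published standards: adjust to your own style guide.
GENERIC_TITLES = {"untitled", "document", "home", "new page", "draft"}
MAX_TITLE_LENGTH = 60


def audit_titles(pages):
    """pages: list of (url, title) pairs. Returns {url: [issues]} for pages with problems."""
    title_counts = Counter(title.strip().lower() for _, title in pages)
    problems = {}
    for url, title in pages:
        clean = title.strip()
        issues = []
        if not clean:
            issues.append("missing title")
        elif clean.lower() in GENERIC_TITLES:
            issues.append("generic title")
        if len(clean) > MAX_TITLE_LENGTH:
            issues.append("title too long")
        if clean and title_counts[clean.lower()] > 1:
            issues.append("duplicate title")
        if issues:
            problems[url] = issues
    return problems


# Hypothetical pages exported from the search index.
example_pages = [
    ("/reports/acme-services-2016", "Acme services annual report 2016"),
    ("/reports/draft-1", ""),
    ("/team/page-a", "Untitled"),
    ("/team/page-b", "Untitled"),
    ("/policies/travel", "Travel policy for all staff, contractors, visitors and temporary workers in every region"),
]
problems = audit_titles(example_pages)
for url, issues in problems.items():
    print(url, "->", ", ".join(issues))
```

A list like this gives content owners a short, concrete fix list, which is far easier to act on than a general call to “write better content”.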

One thing is certain for intranet search: you will not outperform Google, so if you can’t beat ’em, join ’em.

This article was originally published on LinkedIn Pulse.

Photo credit: Alan Stanton